Notes on ResNeXt

Compiled by: Zhang Yiji (张一极), 2020-03-26 22:08

Quoting one sentence from the paper:

In an Inception module, the input is split into a few lower-dimensional embeddings (by 1×1 convolutions), transformed by a set of specialized filters (3×3, 5×5, etc.), and merged by concatenation.

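As discussed for Inception, combining parallel blocks inside a module works well for feature extraction. The design follows a split-transform-merge (STM) rule: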

split-transform-merge

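split: the input feature map is fed into several separate convolution branches, which extract features at different receptive fields;

transform: the convolutions of different kernel sizes in each branch transform the feature map;

merge: the outputs of the branches are combined after the grouped convolutions (by concatenation in Inception).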
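A minimal PyTorch sketch of split-transform-merge in the Inception style, for illustration only. The class name `SplitTransformMerge`, the branch width of 16, and the choice of 1×1, 3×3, and 5×5 branches are assumptions, not taken from the paper's exact Inception configuration.

```python
import torch
import torch.nn as nn


class SplitTransformMerge(nn.Module):
    """Hypothetical Inception-style module illustrating split-transform-merge."""

    def __init__(self, in_channels, branch_channels=16):
        super().__init__()
        # split: 1x1 convs project the input into lower-dimensional embeddings
        # transform: each branch then applies its own filter size
        self.branch1 = nn.Conv2d(in_channels, branch_channels, kernel_size=1)
        self.branch3 = nn.Sequential(
            nn.Conv2d(in_channels, branch_channels, kernel_size=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(branch_channels, branch_channels, kernel_size=3, padding=1),
        )
        self.branch5 = nn.Sequential(
            nn.Conv2d(in_channels, branch_channels, kernel_size=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(branch_channels, branch_channels, kernel_size=5, padding=2),
        )

    def forward(self, x):
        # merge: concatenate the branch outputs along the channel dimension
        return torch.cat([self.branch1(x), self.branch3(x), self.branch5(x)], dim=1)


x = torch.randn(2, 64, 32, 32)
out = SplitTransformMerge(64)(x)
print(out.shape)  # torch.Size([2, 48, 32, 32])
```

Padding keeps the spatial size unchanged in every branch, so the outputs can be concatenated; the merged output has 3 × 16 = 48 channels.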

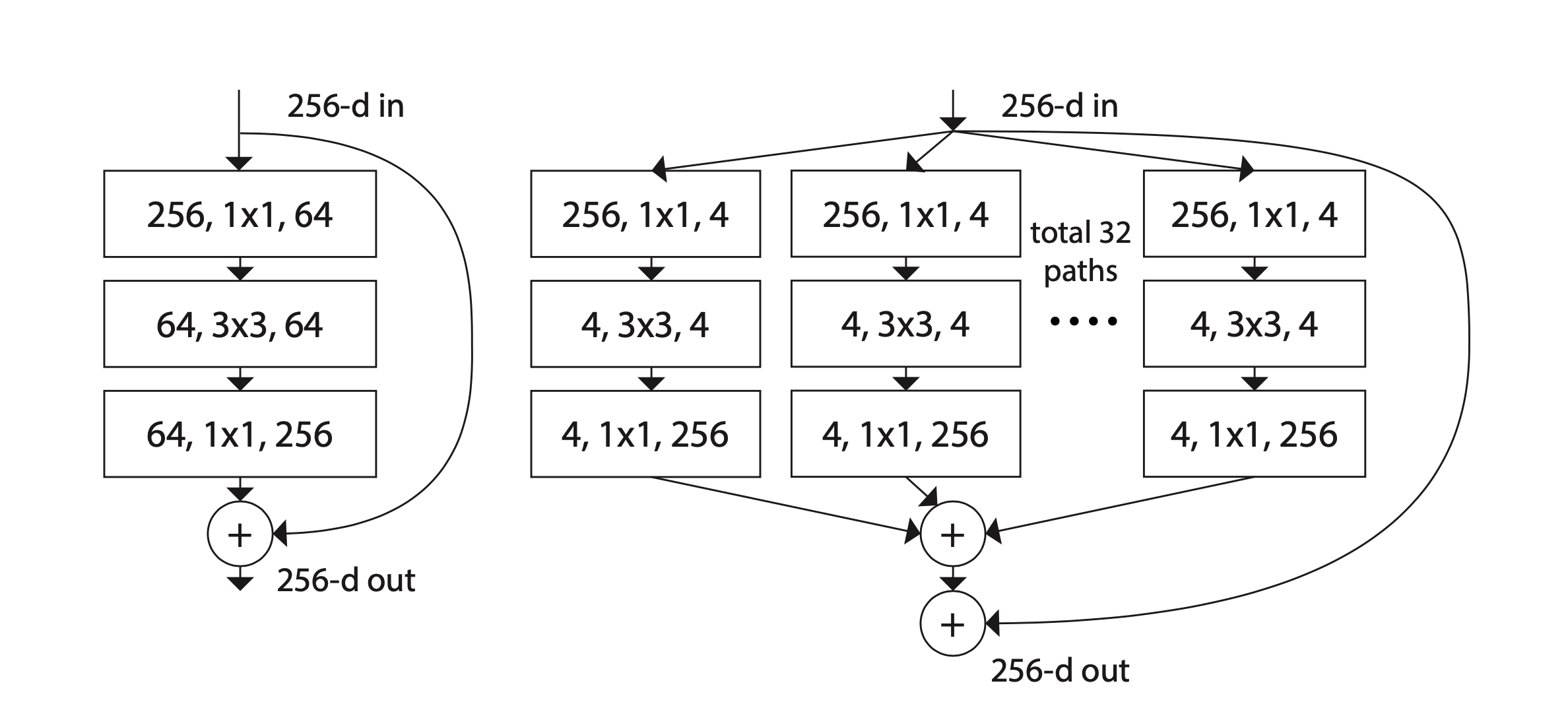

The figure above illustrates this.

Details:

Multi-branch convolutional networks. The Inception models [38, 17, 39, 37] are successful multi-branch architectures where each branch is carefully customized. ResNets [14] can be thought of as two-branch networks where one branch is the identity mapping. Deep neural decision forests [22] are tree-patterned multi-branch networks with learned splitting functions.

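Multi-branch networks: Inception uses multiple convolution branches, each carefully customized. ResNet can be viewed as having two paths: a main branch that transforms the features and a shortcut branch that is an identity mapping. ResNeXt uses many paths that all share the same structure.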

Grouped convolutions. The use of grouped convolutions dates back to the AlexNet paper [24], if not earlier. The motivation given by Krizhevsky et al. [24] is for distributing the model over two GPUs. Grouped convolutions are supported by Caffe [19], Torch [3], and other libraries, mainly for compatibility of AlexNet. To the best of our knowledge, there has been little evidence on exploiting grouped convolutions to improve accuracy. A special case of grouped convolutions is channel-wise convolutions in which the number of groups is equal to the number of channels. Channel-wise convolutions are part of the separable convolutions in [35].
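To make the grouped-convolution idea concrete, here is a small PyTorch sketch (the channel count of 8 and `groups = 2` are arbitrary choices for illustration). It checks that `nn.Conv2d(..., groups=2)` gives the same result as splitting the input channels into two groups, convolving each group with its own slice of the weight, and concatenating, and it shows the weight shape of the channel-wise special case where `groups` equals the number of channels.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
channels, groups = 8, 2
conv = nn.Conv2d(channels, channels, kernel_size=3, padding=1, groups=groups, bias=False)
x = torch.randn(1, channels, 5, 5)

# Manual version: split the channels into groups, convolve each group with its
# slice of the weight (shape: out, in / groups, k, k), then concatenate.
per_group = channels // groups
pieces = []
for g in range(groups):
    xg = x[:, g * per_group:(g + 1) * per_group]
    wg = conv.weight[g * per_group:(g + 1) * per_group]
    pieces.append(F.conv2d(xg, wg, padding=1))
manual = torch.cat(pieces, dim=1)

same = torch.allclose(conv(x), manual, atol=1e-5)
print(same)  # True

# Channel-wise (depthwise) convolution: groups == number of channels,
# so each output channel sees exactly one input channel.
depthwise = nn.Conv2d(channels, channels, kernel_size=3, padding=1, groups=channels, bias=False)
print(depthwise.weight.shape)  # torch.Size([8, 1, 3, 3])
```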

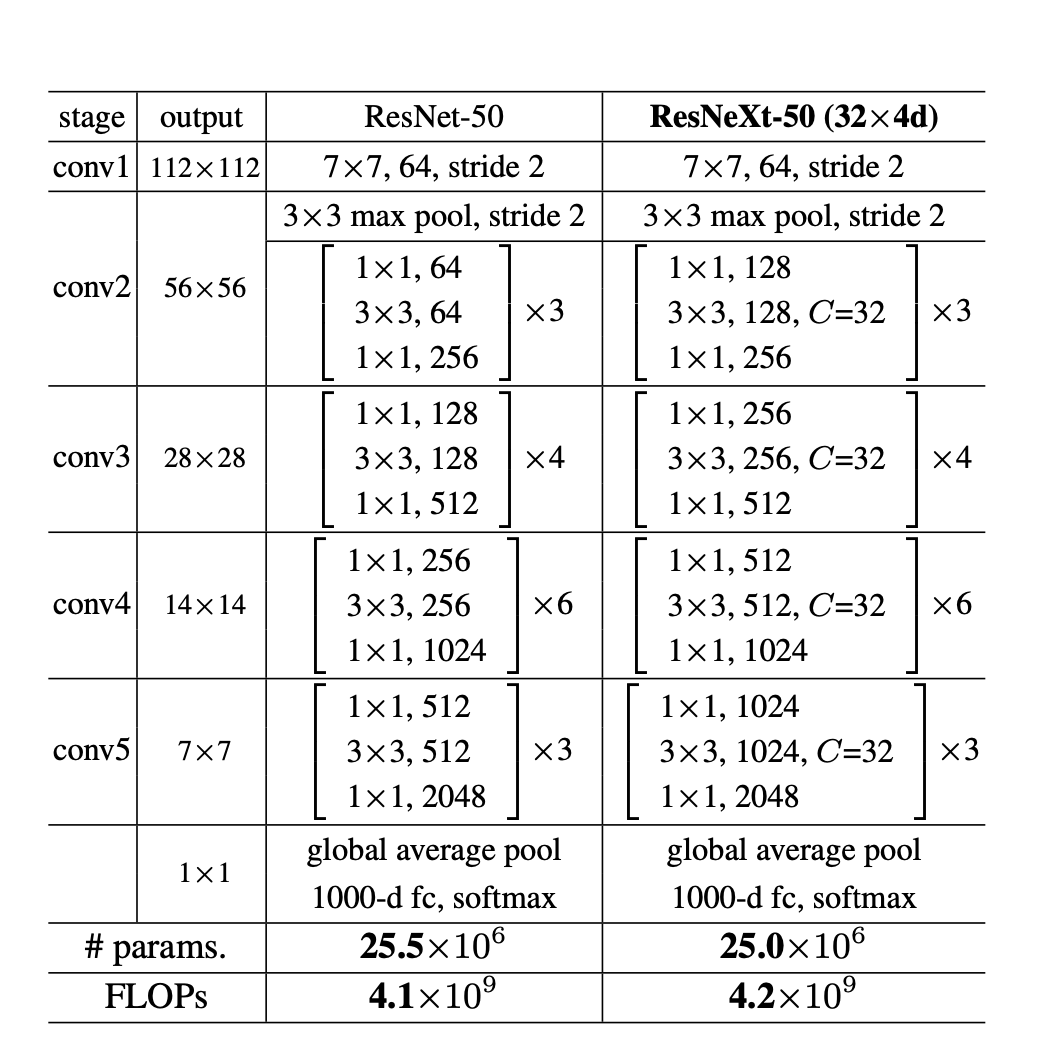

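Network architecture:

The original ResNet bottleneck block passes the input through three convolution layers to produce a single output feature map. ResNeXt instead uses 32 parallel convolution paths (cardinality 32) and sums their outputs: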

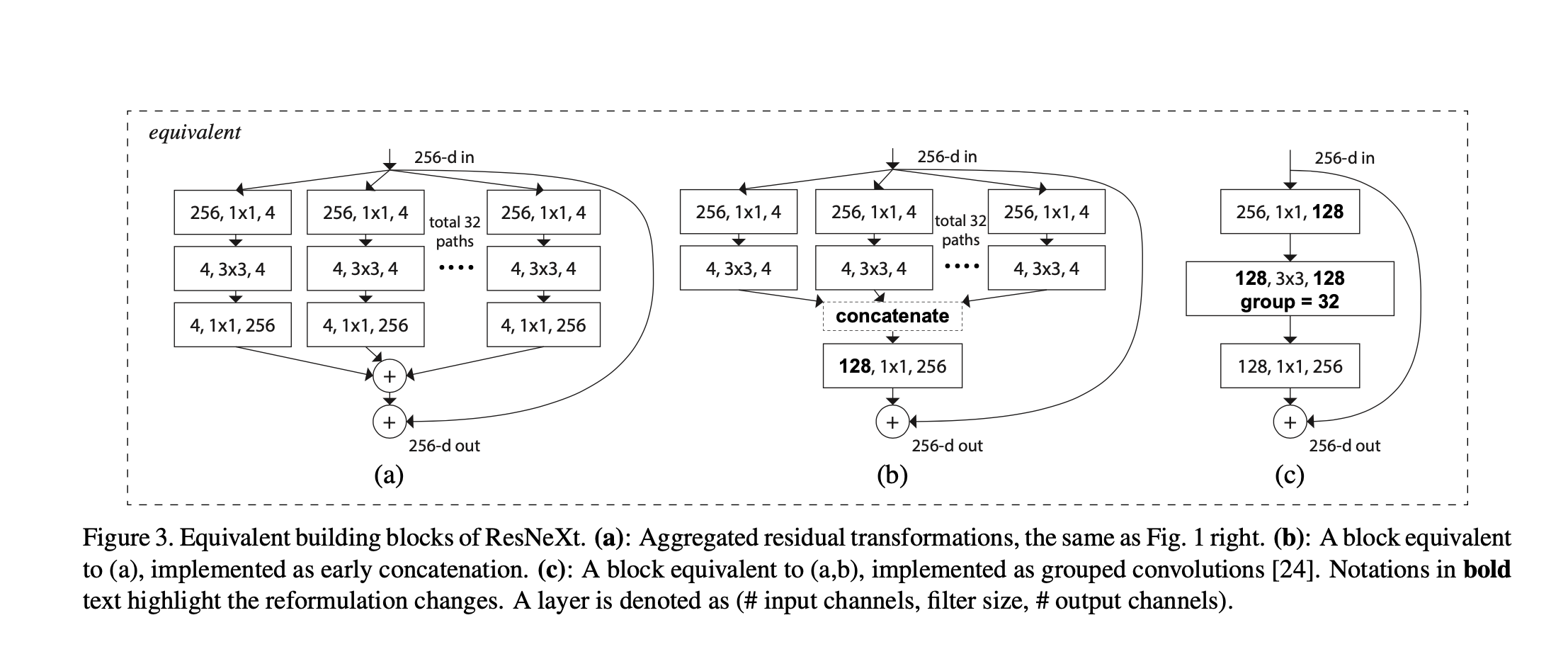
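The aggregated block, written literally as 32 identical paths whose outputs are summed (form (a) in the figure above), can be sketched as follows. This is an illustrative sketch assuming 256 input/output channels and a bottleneck width of 4 per path; the class name `AggregatedBlockA` is hypothetical, and BatchNorm is omitted for brevity.

```python
import torch
import torch.nn as nn


class AggregatedBlockA(nn.Module):
    """Hypothetical sketch of form (a): 32 same-topology paths, summed, plus identity shortcut."""

    def __init__(self, channels=256, cardinality=32, bottleneck_width=4):
        super().__init__()
        # Each path: 1x1 reduce -> 3x3 transform -> 1x1 expand (BatchNorm omitted)
        self.paths = nn.ModuleList([
            nn.Sequential(
                nn.Conv2d(channels, bottleneck_width, kernel_size=1, bias=False),
                nn.ReLU(inplace=True),
                nn.Conv2d(bottleneck_width, bottleneck_width, kernel_size=3, padding=1, bias=False),
                nn.ReLU(inplace=True),
                nn.Conv2d(bottleneck_width, channels, kernel_size=1, bias=False),
            )
            for _ in range(cardinality)
        ])

    def forward(self, x):
        # Aggregate: sum the outputs of all paths, then add the identity shortcut
        out = sum(path(x) for path in self.paths)
        return torch.relu(out + x)


block = AggregatedBlockA()
y = block(torch.randn(1, 256, 8, 8))
print(y.shape)  # torch.Size([1, 256, 8, 8])
```

Looping over 32 small modules is slow in practice, which is why implementations use the equivalent grouped-convolution form instead.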

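The difference between (a) and (b) is that the 32 separate 1×1 convolutions, each operating on a 4-channel path, are merged into a single 1×1 convolution over the concatenated 4 × 32 = 128 channels. The two forms are mathematically equivalent.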
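The equivalence can be checked numerically. In this sketch, random tensors stand in for the per-path features after the 3×3 convolutions (an assumption for illustration): summing 32 separate 1×1 convolutions (4 → 256 channels) gives the same result as one 1×1 convolution (128 → 256 channels) applied to the concatenated features, with the 32 weight tensors concatenated along the input-channel dimension.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
cardinality, width, out_ch = 32, 4, 256

# Stand-ins for the per-path features (4 channels each) and per-path 1x1 weights (4 -> 256)
feats = [torch.randn(1, width, 8, 8) for _ in range(cardinality)]
weights = [torch.randn(out_ch, width, 1, 1) for _ in range(cardinality)]

# Form (a): each path applies its own 1x1 conv, and the outputs are summed
form_a = sum(F.conv2d(f, w) for f, w in zip(feats, weights))

# Form (b): concatenate the features to 128 channels, then one 1x1 conv whose
# weight is the per-path weights concatenated along the input-channel axis
form_b = F.conv2d(torch.cat(feats, dim=1), torch.cat(weights, dim=1))

equivalent = torch.allclose(form_a, form_b, atol=1e-4)
print(equivalent)  # True
```

The two results differ only by floating-point rounding, because a 1×1 convolution over concatenated inputs is just a sum of per-slice contributions.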

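The parameter savings of grouped convolution (biases ignored):

Standard convolution: input channels = 128, output channels = 128, kernel size = 3×3, parameters: 128 × 128 × 3 × 3 = 147456

Grouped convolution with groups = 2: the 128 input and 128 output channels are split into 2 groups, each mapping 64 input channels to 64 output channels with a 3×3 kernel, parameters: 64 × 64 × 3 × 3 × 2 = 73728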

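The parameter count is halved, which is quite elegant. More generally, with g groups each group holds (C_in / g) × (C_out / g) × k × k weights, so the total is 1/g of the standard convolution's C_in × C_out × k × k.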
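The arithmetic above can be confirmed by counting the parameters of `nn.Conv2d` layers directly. The `groups=32` case is added here for comparison with ResNeXt's cardinality; the helper `num_params` is just for illustration.

```python
import torch.nn as nn


def num_params(module):
    # Total number of learnable parameters in a module
    return sum(p.numel() for p in module.parameters())


standard = nn.Conv2d(128, 128, kernel_size=3, bias=False)
grouped2 = nn.Conv2d(128, 128, kernel_size=3, groups=2, bias=False)
grouped32 = nn.Conv2d(128, 128, kernel_size=3, groups=32, bias=False)

print(num_params(standard))   # 147456
print(num_params(grouped2))   # 73728
print(num_params(grouped32))  # 4608
```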

Code:

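```python
import torch.nn as nn
import torch.nn.functional as F

# Output channel counts of the three convolutions in the block template (first stage)
layer_1 = 128
layer_2 = 128
layer_3 = 256


class ResNextBottleNeckC(nn.Module):
    # Class header and C were missing from the original snippet; name and value assumed
    def __init__(self, in_channels, out_channels, stride):
        super().__init__()
        C = 32  # cardinality: number of groups in the 3x3 convolution
        # Inner width is fixed at 128 here; the paper doubles it at each stage
        self.split_transforms = nn.Sequential(
            # Dense 1x1 reduction as in Fig. 3(c); the original notes used groups=C here
            nn.Conv2d(in_channels, 128, kernel_size=1, bias=False),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            # Grouped 3x3 convolution: 32 groups of 4 channels each
            nn.Conv2d(128, 128, kernel_size=3, stride=stride, groups=C, padding=1, bias=False),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            # 1x1 expansion to out_channels * 4
            nn.Conv2d(128, out_channels * 4, kernel_size=1, bias=False),
            nn.BatchNorm2d(out_channels * 4),
        )
        self.shortcut = nn.Sequential()  # identity when no downsampling is needed
        if stride != 1 or in_channels != out_channels * 4:
            # Projection shortcut when the spatial size or channel count changes
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels * 4, stride=stride, kernel_size=1, bias=False),
                nn.BatchNorm2d(out_channels * 4),
            )

    def forward(self, x):
        return F.relu(self.split_transforms(x) + self.shortcut(x))
```

The network definition is essentially the same as ResNet's: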

```python
import torch.nn as nn
import torch.nn.functional as F


class ResNext(nn.Module):

    def __init__(self, block, num_blocks, num_classes=100):
        super().__init__()
        self.in_channels = 64

        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, 3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True)
        )

        self.conv2 = self.make_layer(block, num_blocks[0], 64, 1)
        self.conv3 = self.make_layer(block, num_blocks[1], 128, 2)
        self.conv4 = self.make_layer(block, num_blocks[2], 256, 2)
        self.conv5 = self.make_layer(block, num_blocks[3], 512, 2)
        self.avg = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * 4, num_classes)  # was hard-coded to 100

    def make_layer(self, block, num_block, out_channels, stride):
        """Building resnext block
        Args:
            block: block type(default resnext bottleneck c)
            num_block: number of blocks per layer
            out_channels: output channels per block
            stride: block stride

        Returns:
            a resnext layer
        """
        # Only the first block of a stage downsamples
        strides = [stride] + [1] * (num_block - 1)
        layers = []
        for stride in strides:
            layers.append(block(self.in_channels, out_channels, stride))
            self.in_channels = out_channels * 4

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.avg(x)
        x = x.view(x.size(0), -1)
        logits = self.fc(x)
        probas = F.softmax(logits, dim=1)
        return logits, probas
```

Training log (excerpt):

```
Time elapsed: 186.09 min
Epoch: 002/010 | Batch 0000/25000 | Cost: 1.2423
Epoch: 002/010 | Batch 0050/25000 | Cost: 1.6971
Epoch: 002/010 | Batch 0100/25000 | Cost: 1.5043
Epoch: 002/010 | Batch 0150/25000 | Cost: 3.7873
Epoch: 002/010 | Batch 0200/25000 | Cost: 0.9755
Epoch: 002/010 | Batch 0250/25000 | Cost: 0.8241
Epoch: 002/010 | Batch 0300/25000 | Cost: 0.3721
Epoch: 002/010 | Batch 0350/25000 | Cost: 1.3209
Epoch: 002/010 | Batch 0400/25000 | Cost: 1.5591
Epoch: 002/010 | Batch 0450/25000 | Cost: 0.4816
Epoch: 002/010 | Batch 0500/25000 | Cost: 1.1595
Epoch: 002/010 | Batch 0550/25000 | Cost: 1.0004
Epoch: 002/010 | Batch 0600/25000 | Cost: 2.2381
Epoch: 002/010 | Batch 0650/25000 | Cost: 1.0034
...
Epoch: 005/010 | Batch 13500/25000 | Cost: 0.2052
Epoch: 005/010 | Batch 13550/25000 | Cost: 2.2128
Epoch: 005/010 | Batch 13600/25000 | Cost: 0.0544
Epoch: 005/010 | Batch 13650/25000 | Cost: 0.1864
Epoch: 005/010 | Batch 13700/25000 | Cost: 1.7534
```